Debugging RAG Chatbots and AI Agents with Sessions

At what point in a multi-step process does your AI agent start hallucinating? Have you noticed consistent issues with a specific part of your agentic workflow?

These are common questions developers face when building AI agents and Retrieval-Augmented Generation (RAG) chatbots. Without visibility into how users interact with your large language model (LLM), getting reliable responses and minimizing errors like hallucinations is extremely challenging.

In this blog, we’ll look at how Helicone’s Sessions feature can help you maintain context, reduce errors, and improve the overall performance of your LLM apps. We’ll also cover other tools that address these pitfalls so you can build more robust and reliable AI agents.

What you will learn:

- What are AI agents?

- How do they work?

- Challenges of debugging AI agents

- Effective debugging tools

- How different industries debug AI agents using Sessions

First, what are AI agents?

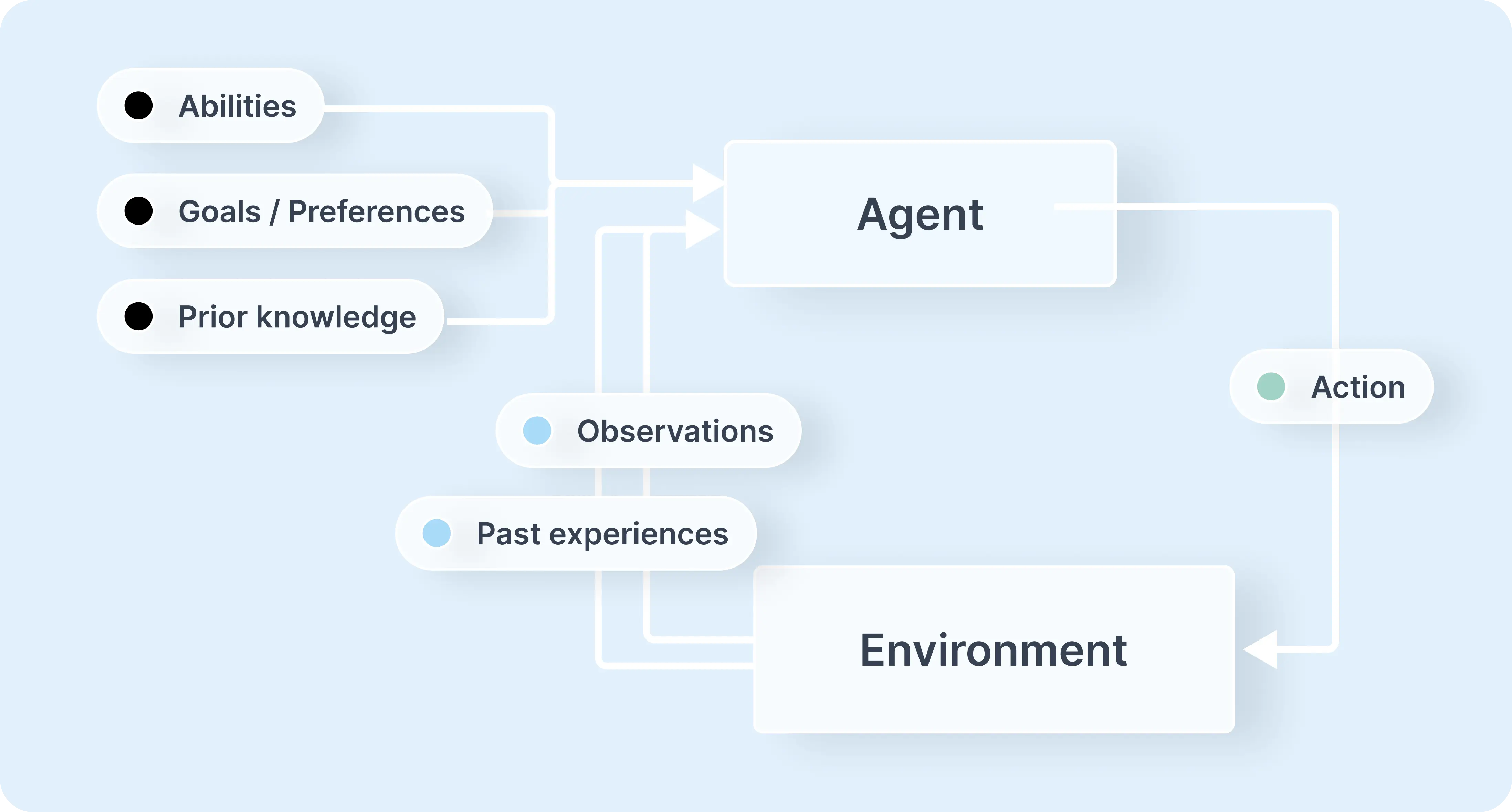

An AI agent is a software program that autonomously performs specific tasks using advanced decision-making abilities. It interacts with its environment by collecting data, processing it, and deciding on the best actions to achieve predefined goals.
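The collect-process-decide cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `Agent` class and `refund_policy` function are hypothetical, and the keyword rules in `refund_policy` stand in for what would normally be an LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Toy agent that maps each observation to an action toward its goal."""
    goal: str
    policy: Callable[[str, str], str]  # (goal, observation) -> action
    history: list[tuple[str, str]] = field(default_factory=list)

    def step(self, observation: str) -> str:
        # 1. Collect: the observation is data received from the environment.
        # 2. Process and decide: the policy picks the next action for the goal.
        action = self.policy(self.goal, observation)
        # 3. Record the (observation, action) pair so the run can be inspected later.
        self.history.append((observation, action))
        return action


def refund_policy(goal: str, observation: str) -> str:
    """Hypothetical rule-based policy standing in for an LLM decision."""
    text = observation.lower()
    if "refund" in text:
        return "process_refund"
    if "address" in text:
        return "update_account"
    # Anything the agent cannot handle is escalated to a human.
    return "escalate_to_human"


agent = Agent(goal="resolve support tickets", policy=refund_policy)
for message in ["I want a refund", "Please change my address", "My app crashes"]:
    agent.step(message)
```

Because every decision is appended to `history`, you can replay which observation led to which action, which is the same idea session tracing applies at scale.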

Types of AI Agents

Copilots

Copilots assist users by providing suggestions and recommendations. For example, when writing code, a copilot might suggest code snippets, highlight potential bugs, or offer optimization tips, but the developer decides whether to implement these suggestions.

Autonomous Agents

Autonomous agents perform tasks independently without human intervention. For example, an autonomous agent can handle customer inquiries by identifying issues, accessing account information, performing necessary actions (like processing refunds or updating account details), and responding to the customer. It can also escalate to a human agent if it encounters problems beyond its current capabilities.

Multi-Agent Systems

Multi-agent systems involve interactions and collaboration between multiple autonomous agents to achieve a collective goal. These systems have advantages like dynamic reasoning, the ability to distribute tasks, and better memory for retaining information.

What is Retrieval-Augmented Generation?

Retrieval-Augmented Generation (RAG) is an advanced framework that allows an AI agent to incorporate information from external knowledge bases (e.g., databases, documents, articles) into its responses.

RAG significantly improves AI agents by retrieving the most recent data through keyword search, semantic similarity, or other advanced search techniques, and using it to generate more accurate, personalized, and context-specific responses.
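To illustrate the retrieval step, here is a minimal sketch of semantic-similarity search followed by prompt construction. It assumes documents already have embeddings: the tiny hand-written 3-dimensional vectors below are placeholders for real embedding-model output, and a production system would typically query a vector database rather than a Python list.

```python
import math

# Placeholder "embeddings": hand-written 3-d vectors standing in for the output
# of a real embedding model (an assumption for illustration).
DOCS: list[tuple[str, list[float]]] = [
    ("Flights to Paris depart at 9am.", [1.0, 0.0, 0.1]),
    ("Hotel check-in is at 3pm.", [0.0, 1.0, 0.0]),
    ("Paris flights allow one carry-on bag.", [0.7, 0.3, 0.0]),
]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], docs: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the texts of the k documents most similar to the query vector."""
    ranked = sorted(docs, key=lambda d: cosine(query_vec, d[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Ground the LLM's answer in the retrieved passages."""
    passages = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{passages}\n\nQuestion: {question}"


# Pretend [1.0, 0.0, 0.0] is the embedding of the user's question.
top = retrieve([1.0, 0.0, 0.0], DOCS, k=2)
prompt = build_prompt("What should I know about Paris flights?", top)
```

The irrelevant hotel document scores zero similarity and never reaches the prompt, which is exactly the kind of retrieval decision worth logging when you debug a RAG chatbot.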

Four Core Components of AI Agents

Unlike traditional software programs, AI agents perform tasks autonomously based on rational decision-making principles. Typically, AI agents have four components:

- Planning

- Tool / Vector Database Calls

- Perception

- Memory

Planning

AI agents have the ability to plan and sequence actions to achieve specific goals. The integration of LLMs has significantly improved their planning capabilities, allowing them to formulate more sophisticated and effective strategies.

Tool / Vector Database Calls

Advanced AI agents often interact with external tools, APIs, and services through function calls, allowing them to handle complex operations such as:

- Fetching real-time information from APIs (e.g., weather data, stock prices).

- Utilizing translation services to convert text between languages.

- Performing tasks like image recognition or manipulation through specialized libraries.

- Running custom scripts to automate specific workflows or computations.
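A common way to wire up function calls is a registry that maps tool names to Python functions, so the agent can execute whichever call the model emits. In this sketch the tools are hypothetical stubs, and the JSON shape `{"name": ..., "arguments": {...}}` is an assumption for illustration; check your model provider’s function-calling format, which may differ (for example, arguments are sometimes returned as a JSON-encoded string).

```python
import json
from typing import Any, Callable

# Registry of tools the agent is allowed to call, keyed by function name.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:
    # Hypothetical stub; a real tool would call a weather API here.
    return f"Sunny in {city}"


@tool
def translate(text: str, target: str) -> str:
    # Hypothetical stub standing in for a translation service.
    return f"[{target}] {text}"


def dispatch(call_json: str) -> Any:
    """Execute a model-emitted call of the assumed form {"name": ..., "arguments": {...}}."""
    call = json.loads(call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        # Surfacing unknown tools loudly makes hallucinated calls easy to spot in logs.
        raise ValueError(f"Unknown tool: {call['name']}")
    return fn(**call["arguments"])
```

Raising on unknown tool names, rather than silently ignoring them, turns a hallucinated function call into a visible error you can find in your traces.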

Perception

By perceiving and processing information from their environment, AI agents become more interactive and context-aware. This sensory information can include visual, auditory, and other types of data, enabling the agents to respond appropriately to different environmental cues.

Memory

AI agents have the capacity to remember past interactions and behaviors, including previous tool usage and planning decisions. They store these experiences and can engage in self-reflection to inform future actions. This memory component provides continuity across interactions and allows their performance to improve over time.
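Agent memory can be implemented in many ways (conversation buffers, summaries, vector stores). As one minimal sketch, assuming simple in-process storage, the hypothetical `AgentMemory` class below keeps a sliding window of recent turns plus a list of persistent “reflections” that are rendered into the next prompt.

```python
from collections import deque


class AgentMemory:
    """Toy memory: a sliding window of recent turns plus persistent reflections."""

    def __init__(self, max_turns: int = 4):
        # Oldest turns are dropped automatically once the window is full.
        self.turns: deque[tuple[str, str]] = deque(maxlen=max_turns)
        self.reflections: list[str] = []

    def remember(self, role: str, content: str) -> None:
        self.turns.append((role, content))

    def reflect(self, lesson: str) -> None:
        # Lessons outlive the window so that future plans can draw on them.
        self.reflections.append(lesson)

    def as_context(self) -> str:
        """Render memory as text to prepend to the next LLM prompt."""
        lessons = "\n".join(f"Lesson: {r}" for r in self.reflections)
        recent = "\n".join(f"{role}: {content}" for role, content in self.turns)
        return f"{lessons}\n{recent}".strip()


memory = AgentMemory(max_turns=2)
memory.remember("user", "Book me a flight")
memory.remember("assistant", "Which dates?")
memory.remember("user", "March 3 to March 7")
memory.reflect("Confirm dates before searching flights")
```

The window size is a trade-off: a larger window keeps more context but consumes more of the model’s context budget, and whatever falls out of it is exactly the kind of lost context that shows up later as an error.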

Challenges of Debugging AI agents

⚠️ Their decision-making process is complicated.

AI agents base their decisions on many inputs from diverse data sources (e.g., user interactions, environmental data, and internal states). While traditional software follows explicit instructions, AI agents make decisions based on patterns and correlations learned from data. This adaptive behavior makes their decision paths non-deterministic and harder to trace.

⚠️ No visibility into their internal states.

AI agents function as “black boxes,” so understanding how they transform inputs into outputs is not straightforward. When an agent interacts with external services, APIs, or other agents, its behavior can be unpredictable.

⚠️ Context builds up over time, so do errors.

AI agents often make multiple dependent vector database calls within a single session, which makes the data flow hard to trace. They can also operate over extended sessions, where an early error can cascade through later steps; without proper session tracking, identifying the error’s original source is difficult.
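Session tracking addresses this by recording every step of a run under one session ID with a hierarchical path, so a downstream failure can be tied back to the step that fed it. The tracer below is a hypothetical, in-process illustration only; observability tools such as Helicone record similar information for you.

```python
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Step:
    path: str                    # hierarchical position, e.g. "/answer/retrieve"
    inputs: dict
    output: object = None
    error: Optional[str] = None


@dataclass
class SessionTracer:
    session_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    steps: list[Step] = field(default_factory=list)

    @contextmanager
    def step(self, path: str, **inputs) -> Iterator[Step]:
        record = Step(path, inputs)
        self.steps.append(record)  # appended on entry, so list order = start order
        try:
            yield record
        except Exception as exc:
            record.error = repr(exc)  # keep the failure attached to its step
            raise


tracer = SessionTracer(session_id="demo-session")
with tracer.step("/answer/retrieve", query="refund policy") as s:
    s.output = []  # the vector search silently returned nothing...
try:
    with tracer.step("/answer/generate", n_docs=0):
        raise ValueError("no context to answer from")  # ...which breaks this step
except ValueError:
    pass
```

Reading the steps in order shows that the generation error is downstream of an empty retrieval, which is the cascading pattern described above.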

Tools for Debugging AI Agents

Understanding the internal workings of AI models is inherently challenging, and traditional logging methods often lack the granular data needed to debug complex behaviors. Fortunately, several tools can streamline the debugging process:

1. Helicone open-source

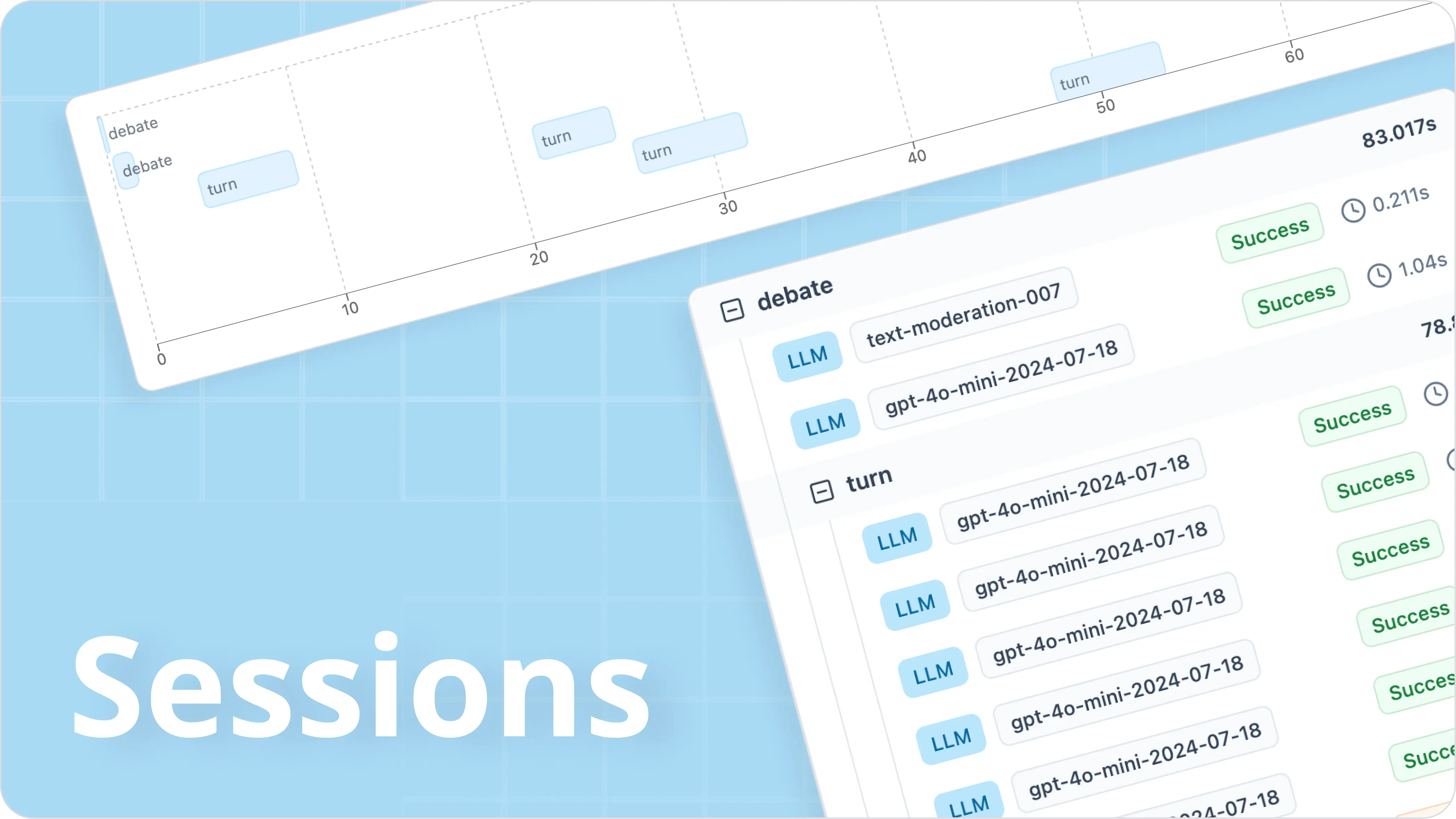

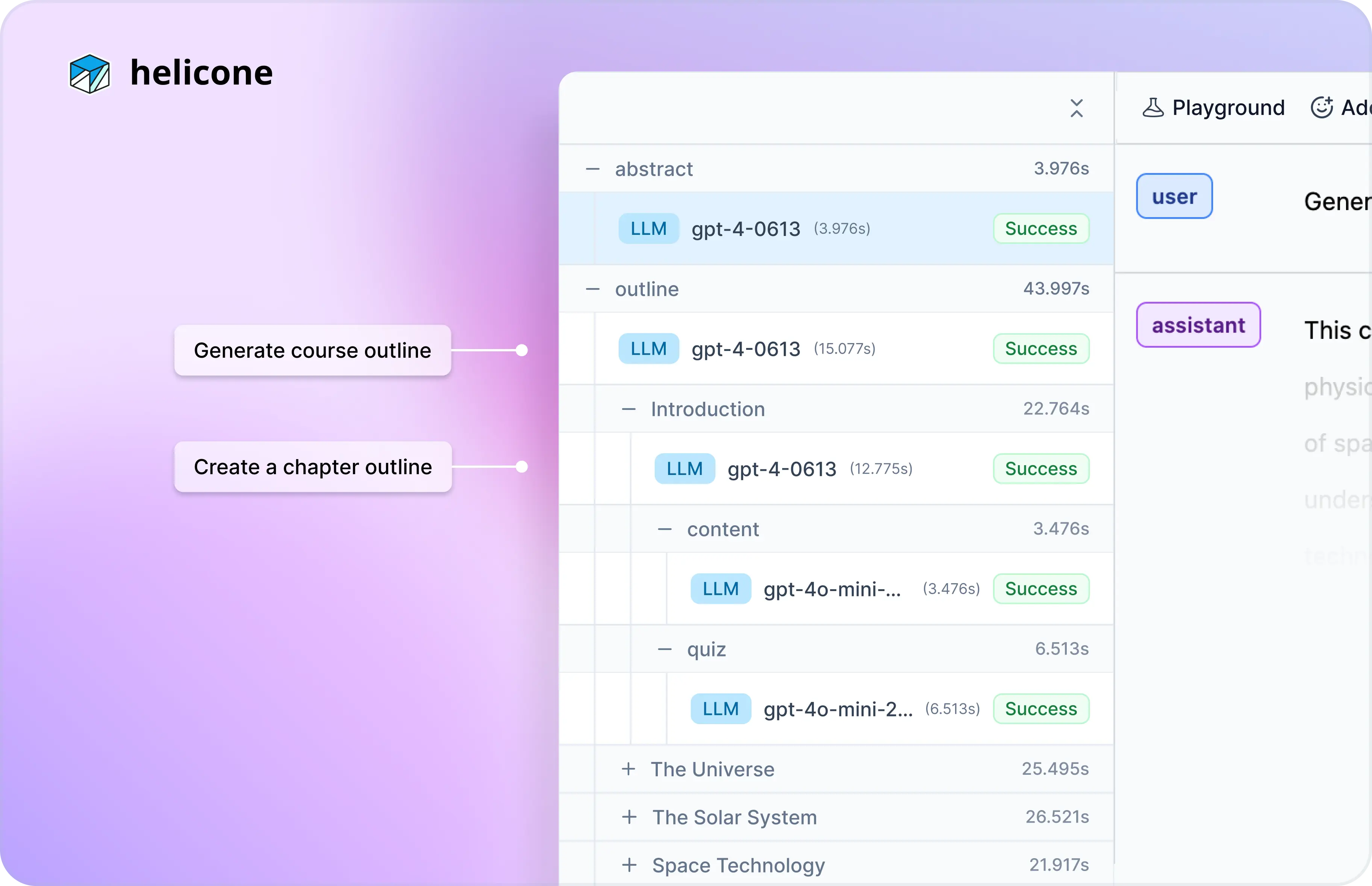

Helicone’s Sessions is an ideal option for teams looking to intuitively group related LLM calls, trace nested agent workflows, and quickly identify issues. Helicone also caters to advanced use cases such as logging requests, responses, and metadata for vector database calls, along with other observability features.
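Sessions work by tagging each request with session metadata. The sketch below assumes the `Helicone-Session-Id`, `Helicone-Session-Path`, and `Helicone-Session-Name` request headers described in Helicone’s Sessions docs at the time of writing (verify them against the current docs before relying on them); the helper function name is hypothetical. It only builds the headers: attaching them to your LLM requests (for example, through the OpenAI Python SDK’s `extra_headers` argument when routing traffic via Helicone) is left out.

```python
import uuid


def helicone_session_headers(session_id: str, path: str, name: str) -> dict[str, str]:
    """Build per-request headers that group calls into one Helicone session.

    Header names are assumed from Helicone's Sessions docs; check the current docs.
    """
    return {
        "Helicone-Session-Id": session_id,  # shared by every call in one run
        "Helicone-Session-Path": path,      # parent/child position, e.g. "/trip/flight-search"
        "Helicone-Session-Name": name,      # human-readable label for the workflow
    }


# One ID per agent run; nested paths express which step spawned which call.
session_id = str(uuid.uuid4())
plan_headers = helicone_session_headers(session_id, "/trip", "Trip planner")
search_headers = helicone_session_headers(session_id, "/trip/flight-search", "Trip planner")
```

Reusing one session ID while varying the path is what lets a session view reconstruct the nested workflow instead of showing a flat list of unrelated requests.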

2. Langfuse open-source

Langfuse is ideal for developers who prefer self-hosting solutions for tracing and debugging AI agent workflows. It offers features similar to Helicone’s and is well-suited for projects that don’t require high scalability or robust, cloud-based support.

3. AgentOps open-source

AgentOps can be a good choice for teams looking for an all-in-one solution to debug AI agents. Despite a less intuitive interface, AgentOps offers comprehensive features for monitoring and managing AI agents.

4. LangSmith

LangSmith is ideal for developers working extensively with the LangChain framework, since its SDKs and documentation are designed primarily to support developers within that ecosystem.

5. Braintrust

Braintrust is a good choice for those focusing on evaluating AI models. It’s an effective solution for projects where model evaluation is the primary concern and agent tracing is a secondary need.

6. Portkey

Portkey is designed for developers looking for the latest tools to track and debug AI agents. It ships new features quickly, which suits teams that want the newest capabilities and are willing to tolerate occasional reliability and stability issues.

How Industries Debug AI Agents Using Sessions

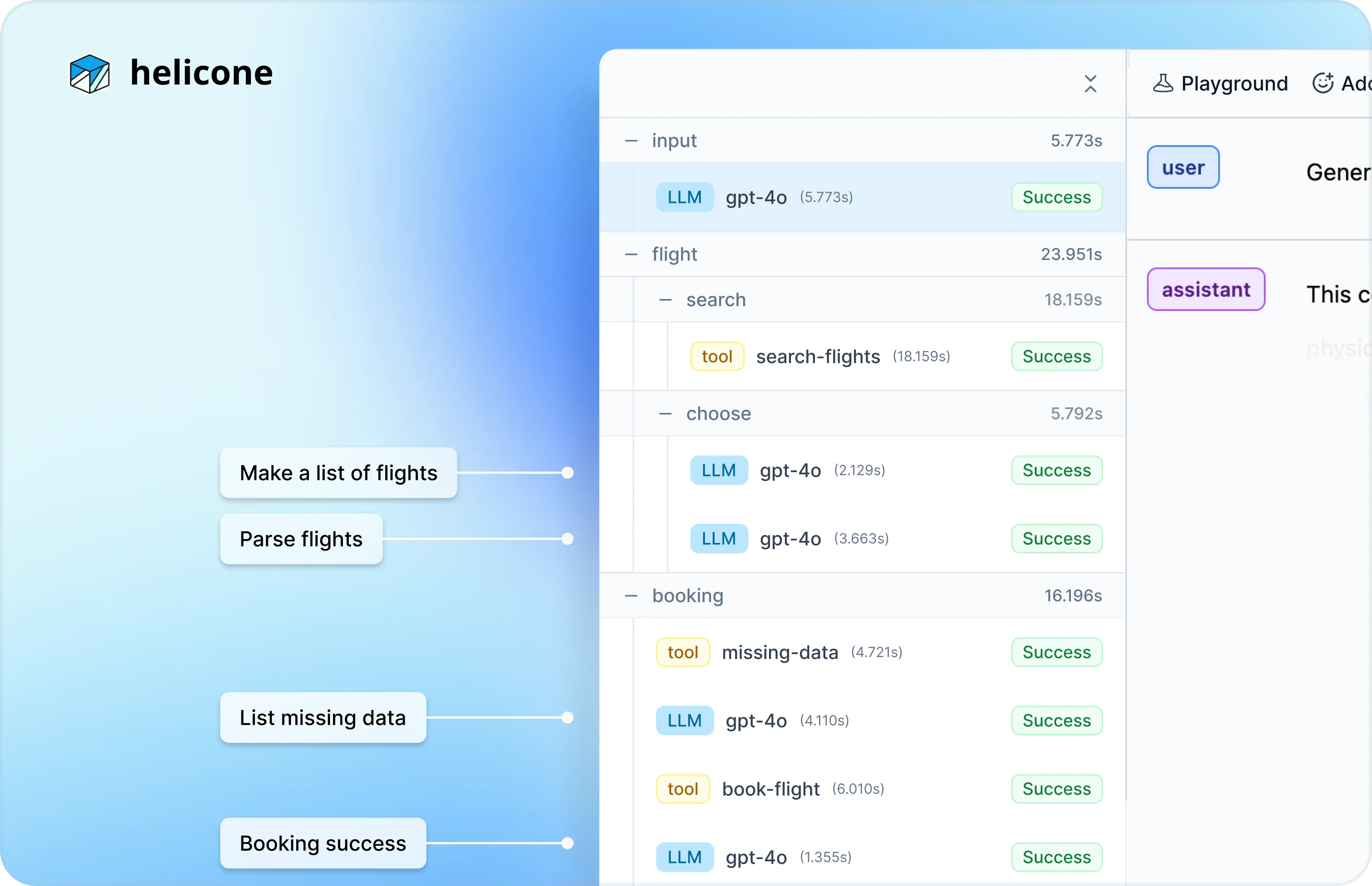

Travel: Resolving Errors in Multi-Step Processes

Challenge

Travel chatbots help users book flights, hotels, and car rentals. Errors can easily occur due to data parsing issues or problems integrating with third-party services, leaving users frustrated or with incomplete bookings.

Solution

Sessions provide a complete trace of the booking interaction, allowing developers to pinpoint exactly where users encounter problems. If users frequently report missing flight confirmations, session traces can reveal whether the issue stems from input parsing errors or glitches with airline APIs, enabling targeted fixes.
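Once traces are exported, finding the root cause can be as simple as taking the earliest failing step in each broken session and counting those steps across sessions. The trace format, step paths, and sample data below are hypothetical; the point is that later steps (like confirmation) often fail only because an earlier step did, so the first failure is the one worth counting.

```python
from collections import Counter
from typing import Optional

# Hypothetical export: each session is an ordered list of (step_path, succeeded).
SESSIONS: dict[str, list[tuple[str, bool]]] = {
    "s1": [("/book/parse-input", True), ("/book/airline-api", False), ("/book/confirm", False)],
    "s2": [("/book/parse-input", False), ("/book/airline-api", False)],
    "s3": [("/book/parse-input", True), ("/book/airline-api", False), ("/book/confirm", False)],
    "s4": [("/book/parse-input", True), ("/book/airline-api", True), ("/book/confirm", True)],
}


def first_failure(steps: list[tuple[str, bool]]) -> Optional[str]:
    """Return the earliest failing step; later failures are often just fallout."""
    return next((path for path, ok in steps if not ok), None)


def root_cause_counts(sessions: dict[str, list[tuple[str, bool]]]) -> Counter:
    """Count how often each step is the first point of failure."""
    causes = (first_failure(steps) for steps in sessions.values())
    return Counter(c for c in causes if c is not None)


counts = root_cause_counts(SESSIONS)
```

In this made-up sample, the airline API step is the first failure more often than input parsing, which would point the fix at the integration rather than the parser.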

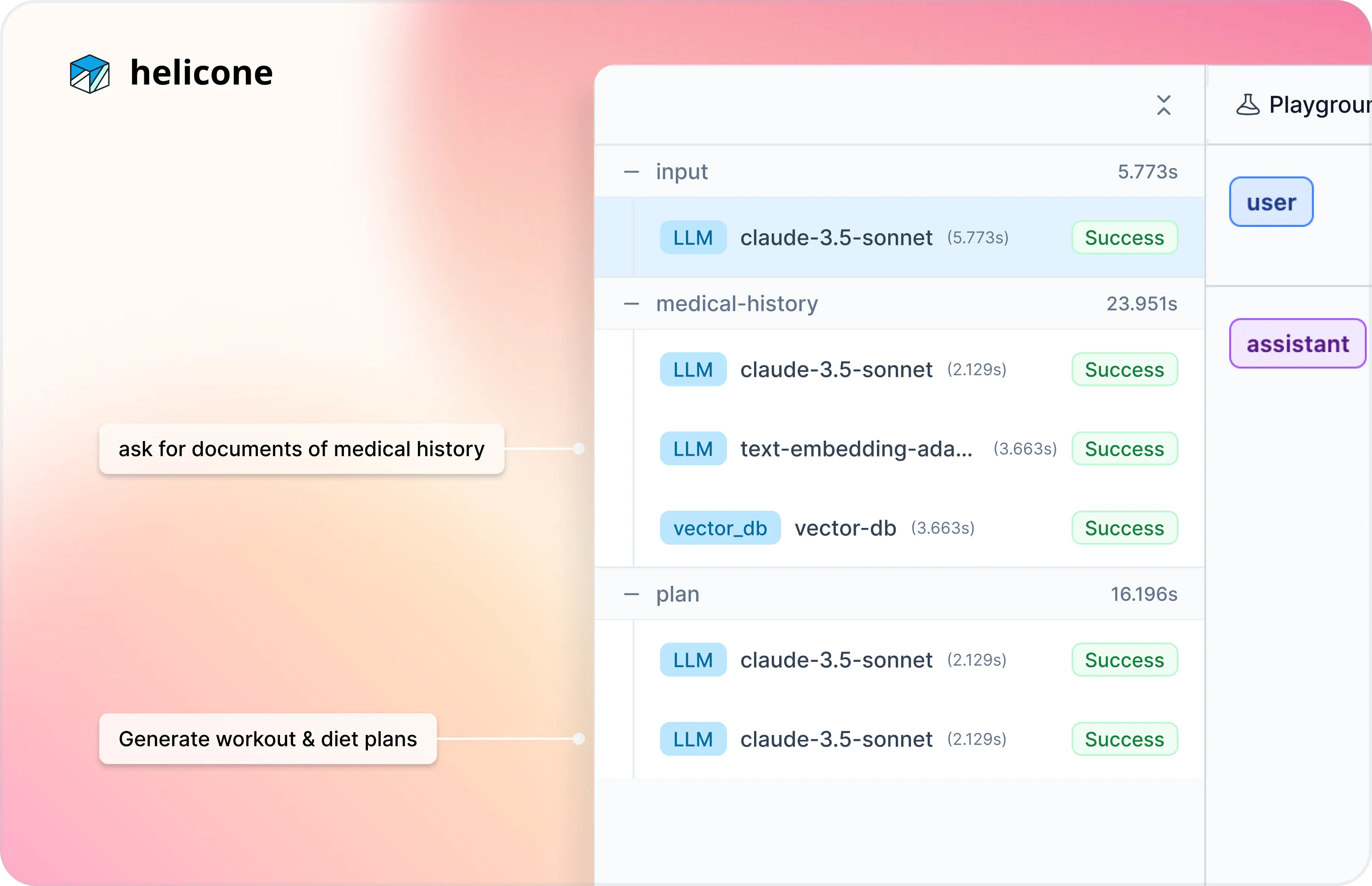

Health & Fitness: Understanding User Intent for Personalization

Challenge

Health and fitness chatbots need to accurately interpret users’ requests to offer personalized workout plans and dietary advice. Misinterpretation leads to generic suggestions and unhappy users who may quickly abandon the chatbot.

Solution

Session data reveals user preferences, helping developers adjust chatbot responses. If session logs show users asking about strength training more often than cardio, developers can tweak the chatbot’s prompt to provide more relevant strength training programs.
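As a rough sketch of this kind of analysis, the snippet below counts how many user messages in a set of session logs touch each topic. The topic keywords and sample messages are hypothetical, and keyword matching is a deliberately simple stand-in for an LLM- or classifier-based intent tagger.

```python
import re
from collections import Counter

# Hypothetical topic keywords; a production system might tag intent with an LLM instead.
TOPICS: dict[str, set[str]] = {
    "strength": {"strength", "weights", "lifting", "squat"},
    "cardio": {"cardio", "running", "cycling", "hiit"},
}


def topic_counts(user_messages: list[str]) -> Counter:
    """Count messages mentioning each topic (each message counts once per topic)."""
    counts: Counter = Counter()
    for msg in user_messages:
        words = set(re.findall(r"[a-z]+", msg.lower()))
        for topic, keywords in TOPICS.items():
            if words & keywords:
                counts[topic] += 1
    return counts


session_messages = [
    "Best squat routine?",
    "How often should I lift weights?",
    "Is running bad for my knees?",
    "Strength plan for beginners",
]
counts = topic_counts(session_messages)
```

A skew like this one toward strength questions is the kind of signal that would justify adjusting the prompt toward strength training programs.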

Education: Generating Content and Ensuring Quality

Challenge

AI agents that create customized learning materials need to generate comprehensive and accurate lessons. Errors or incomplete information lead to poor learning experiences.

Solution

Sessions trace how the agent interprets user requests and generates course content. This data can reveal where the agent misunderstood topics or failed to cover key concepts. Developers can then refine the prompts to generate more thorough content that is well-suited to the student’s learning level.
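One simple check is to compare each generated lesson against a list of concepts it is expected to cover and flag sessions where some are missing for review. The required-concept list is a hypothetical input you would define per lesson, and whole-word matching is only a crude proxy for real coverage.

```python
import re


def missing_concepts(lesson_text: str, required: list[str]) -> list[str]:
    """Return the required concepts that never appear (as whole words) in the lesson."""
    text = lesson_text.lower()
    return [
        concept
        for concept in required
        if not re.search(rf"\b{re.escape(concept.lower())}\b", text)
    ]


lesson = "Photosynthesis converts light energy into chemical energy stored in glucose."
required = ["photosynthesis", "chlorophyll", "glucose", "light energy"]
gaps = missing_concepts(lesson, required)
```

Sessions with a non-empty result can be pulled up in full to see whether the gap came from how the request was interpreted or from the generation prompt itself.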

Becoming Production-Ready

We’re already seeing AI agents in action across various fields like customer service, travel, health and fitness, as well as education. However, for AI agents to be truly production-ready and widely adopted, we need to continue to improve their reliability and accuracy.

This requires actively monitoring their decision-making processes and gaining a deep understanding of how inputs influence outputs. The most effective way to achieve this is through robust monitoring tools that provide comprehensive insights to ensure AI agents consistently deliver optimal results.

Additional Resources

- Doc: How to Group LLM Requests with Helicone’s Sessions

- Doc: How to Log Vector DB Interactions Using Helicone’s JavaScript SDK

- Guide: How to Optimize AI Agents by Replaying LLM Sessions

- Resource: 6 Open-Source Platforms and Frameworks for Building AI Agents

Questions or feedback?

Is any of this information out of date? Do you have additional platforms to add? Please raise an issue, and we’d love to share your insights!